Topological Analysis of Neural Network Loss Landscapes

Pankaj Meena

Abstract

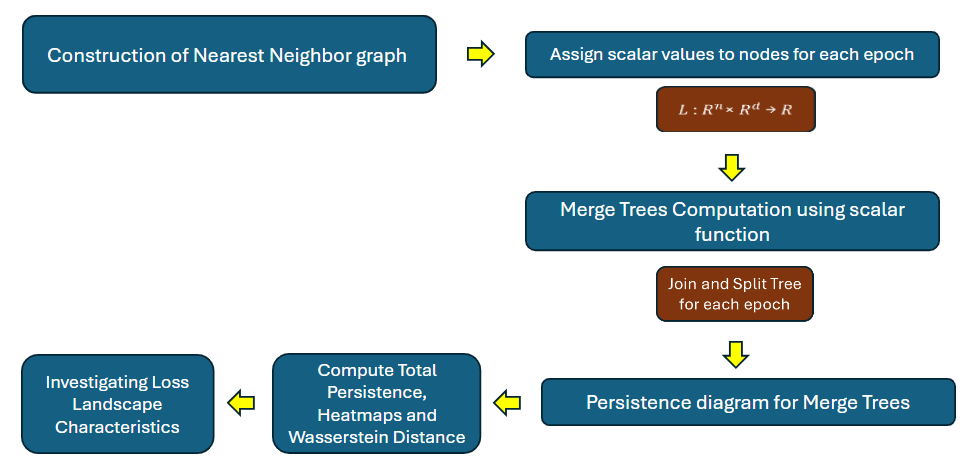

A neural network’s ability to generalize depends on several training choices: the initial learning rate, the regularization provided by weight decay, the batch size, and the timing of learning-rate adjustments during training. But how exactly do these factors affect how well the model adapts to unseen data? To find out, we need to look at the inner workings of the network. In this project, we examine the network’s high-dimensional loss "landscape" and how it changes over the course of training. By comparing training runs of WideResnet-16 with different initial learning rates, we aim to identify the factors behind differing levels of generalization. By studying how these factors affect the model’s ability to adapt to new data, we seek insights into optimizing its performance across various tasks.